Keep Expressing Yourself and Stay Human, Urges AI Expert and Author Brian Christian

By Tom Porter

The next time you’re trying to send a text and your phone keeps trying to “tell” you what to write, try to resist, said Brian Christian. “We must insist on saying what we mean and on sounding like ourselves.”

Christian is a world-renowned author and expert on AI and its ethical implications. His visit to campus on October 6 was part of the College’s Hastings Initiative for AI and Humanity, which aims to prepare students to lead in a world reshaped by artificial intelligence.

Speaking in a public lecture that evening, Christian urged members of the Bowdoin community to hold on to their humanity.

As an example, he described a recent occasion when he was sending a text in which he said that he felt “ill.” The AI tool powering his phone kept autocorrecting the word to “I’ll.” He was tempted to change the word to “sick” instead of “ill,” said Christian, but this would have been giving in to technology at the expense of his individuality. “There's a computer science principle here that making typical things easier necessarily makes atypical things harder,” he explained.

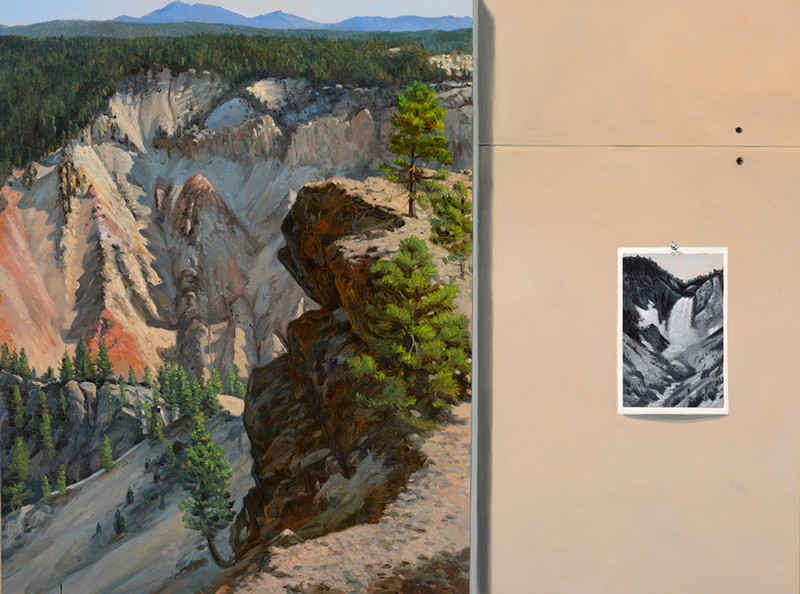

Fretting over the use of a single word may seem like a small thing, Christian told the audience, but it is not. “Your idiosyncrasy, your ability to think, to speak, to act for yourself is the source of your power. This is true as an artist, this is true as a scientist, this is true as a citizen in a democracy. It was hard enough for Picasso to come up with a new way to paint the human face,” he added, “but at least his canvas wasn't literally pushing the brush stroke into more conventional paths. Yet this is exactly where we find ourselves.”

The concerns outlined by Christian and other scholars are often described as an “alignment problem”—also the title of Christian’s latest book, which explores the ethics and safety challenges confronting the field of AI and those working in it. The problem, which scientists and researchers have been grappling with for decades, is how to ensure that technological advances are beneficial to mankind and align with the needs of humanity.

Christian began his day on campus meeting faculty members for a discussion about the issues raised in The Alignment Problem, the third book he has written on AI. “The story of my books is a story of interdisciplinary collision courses,” said Christian, who majored in computer science at Brown University and went on to pursue creative writing and poetry alongside his technological scholarship.

Advances in AI are happening so rapidly, he stressed, that the technology is effectively running away with itself, which can have serious ethical implications. Consider, for example, how AI algorithms are judged to have displayed racial and gender biases in developing tools for areas like health care and the judicial system. This, say experts, is because the datasets used by large language models are themselves skewed and do not tell the whole story. In the case of health care, for example, studies have shown algorithms often train on data that underrepresent racial minorities, which, consequently, can give an inaccurate estimation of their health care needs.

Another risk highlighted by Christian was how AI tools that are meant to be predictive can end up being generative. He gave the example of a product designed to forecast house prices for the mortgage market. It became so successful that people ended up using it to generate prices—in the same way, he said, that predictive texts can end up changing how we write and express ourselves.

“AI at this point demands a radical interdisciplinarity,” Christian told faculty members. In addition to computer science, those working in AI should consider a range of subjects, including philosophy (for ethical issues), political science (to understand regulatory and global supply chain challenges), environmental studies (to appreciate the impact the industry’s huge data storage needs will have on the environment), and English (regarding the linguistic and textual implications of AI). The list goes on.

This approach, said Christian, aligns perfectly with the liberal arts education offered at institutions like Bowdoin.

“Brian did a great job in conveying complex ideas about how these machine learning models are built using training data,” said Associate Professor of Neuroscience and Psychology Erika Nyhus, who attended the workshop. “We also talked about when these tools are useful for students and when they are not.”

The consensus, she said, was that, while AI tools can be very useful in processing data, there are tasks they cannot do.

“They cannot be relied upon to give a person expertise on a topic and should not be used to try and research subjects you don’t understand properly.” That, said Nyhus, needs to be done the old-fashioned way—through study and application.

Christian also met with students during his visit. Asked for advice on how to navigate a future AI-dominated job market, Christian pointed out to students that the industry has evolved so fast that it is difficult to predict exactly what kinds of technical skills will be required in the future. His message was clear: Do what inspires you and see where that leads. There doesn’t have to be a master plan.

Another key piece of advice he had for students and for young people in general is not to forget the power they wield. “This is a live situation,” Christian emphasized, “and it could go either way.” Speaking “partly tongue-in-cheek and partly seriously,” he reminded students that what they “decide is cool and not cool” can end up determining the fate of Silicon Valley companies and the influence they have on our lives: “When Meta releases a pair of glasses that has an always-on camera, you get to decide if that's cool.” And that, he said, could decide what kind of a world we end up living in.

Alma Dudas '27: “I found his take on the alignment problem really interesting, particularly how small gaps in data or poorly defined goals can lead to completely unintended outcomes. He also drew on conversations with researchers across disciplines to make those complex ideas feel real.”

Joe Gaetano '27: “The Alignment Problem completely changed the way that I think about AI and the direction it is heading. Mr. Christian was incredibly insightful in discussing the broader challenges and ideas surrounding AI and its alignment with human values.”

“Your choices, both collectively and individually, matter.”

The next Hastings Initiative event will be a Generative AI Hackathon on October 14th from 9am until 5pm.