Revolutionary Tech

By Alison Bennie for Bowdoin Magazine

Artificial intelligence is booming, and opinions abound about how and whether it should be used.

In a discussion with alumni from three decades who work in a world in which AI is not just impacting the workplace but forming it, changing it, and defining it, we hear loud and clear that engaging with AI, the transformative invention of this age, is not optional.

They talk about the potential of AI to remake workplaces and job quality, the imperative of learning about and engaging with this revolutionary technology, and the best mindset with which to approach this moment.

BOWDOIN: So much of the media focus is on whether artificial intelligence is a danger or an opportunity—to paraphrase Stephen Hawking, the best thing, the worst thing, or the last thing. The three of you work in this world every day and are considering all this carefully. What are some ways this technology connects to doing good?

NEAMTU: One of the reasons I’m building the technology I am [in support of home-care providers] is because it has a positive social impact. A lot of people depend on the services our providers provide. A lot of people who provide those services also depend on the paychecks and all the information that goes into the software that we build. So, it adds a very tangible purpose.

BOWDOIN: What ways do you think AI could be society-changing and outcomes-changing for people? What are the hurdles we have to get over before that could happen?

RYDER: There’s so much fear that AI is going to take over all of our work and automate everything away. What I’ve heard people say is, “You won’t lose your job to AI; you’ll lose your job to someone who knows how to use AI.” What I find interesting about that is that the more we think about how we can use that technology ourselves, the more we can do. That might be a really small effort, depending on the type of work you do or what’s important to you, or it could be something huge, like disease detection. You still need that human touch and analysis, but you can just increase the volume of what you do exponentially with AI. And that’s true of so many different industries: you can automate things that are just tedious.

I heard an executive say recently that they have writers who have gotten really good at writing prompts for tools like ChatGPT. So, instead of just saying, “I’m losing my job because now a machine can write,” they’re reskilling themselves to become prompt writers. I think what’s interesting is that we can learn the skills we want, we can be passionate about the fields we want to be in, but if we think about how technology is kind of a coworker for us, or a support, or an additional piece that we can leverage, then we can really make an impact. It doesn’t have to be something as huge as health care and saving people from really detrimental outcomes. Even in more routine work or office work we can find some really good opportunities. But you’ve got to be willing to learn the tools now.

BOWDOIN: Does that align with what you have been finding in your work, Matt?

BEANE: No. But it’s what we should try for. The science we’ve got available predicts pretty strongly that this technology is no different from any other in that it will be used by those who use capital to accrue more capital. That’s not ascribing any intention to those people. It’s just a function of things like our tax law. Absent some big intervention, it’s just not rational to expect this technology, without other change, to distribute gains in a way that some of us might think of as more equitable.

And the technology doesn’t cause harm; that’s the big sort of mind hack we all need to work on. There’s no such thing as an effect from technology unless somebody is using it. But there are many, many choices around how it’s designed, how it’s sold, how it’s put to use. I talk about one in my TED Talk that’s very easy to get your head around, which is bomb disposal. The way we use technology there actually greatly enhances human connection, skill transfer, information gathering, and the quality of bomb disposal, instead of weakening them. The norm is that a lot of these things get weakened, but it doesn’t have to be that way. It also doesn’t have to be that we concentrate wealth, power, or status in the hands of a few. But it would take quite a bit of effort to change it.

I try to unearth exceptions and show places where people are doing it a different way, where it worked better for everyone. What would it take to replicate that? We can and we totally should try for a “one plus one is three” solution—the problems are too big not to. But the inertia is often in the other direction.

BOWDOIN: Kate Crawford [who studies the social and political implications of AI] says she’s worried not so much that robots will become human but that human workers will be treated more like robots. What can we do to make sure we keep humanness in the workplace?

NEAMTU: That’s really interesting, “Humans are becoming more like robots.” The etymology of “robot” comes, I think, from a Polish word that means “worker.” [The word comes from the Czech robota, “forced labor.”] Even without AI, you would have organizations that were thinking of people as just cogs, robots, workers, whatever, at great detriment to the humanness, to how we derive meaning.

RYDER: Our research has looked extensively at the ways humans and machines interact; we identified five modes of human-AI interaction, depending on the task at hand. We conducted a survey where a significant percentage of our respondents said they viewed AI as a coworker. So there is this weird humanization thing happening, but it’s not necessarily fear that these things are becoming humanoid and taking over what we do; it’s more a question of how we can work with these systems more effectively.

BOWDOIN: I think the example about human workers being treated like robots was Amazon, where you’ve got humans doing part of the work and machines doing part of the work and people feeling treated like machines—monitored differently, measured differently.

BEANE: I can speak to that, having spent hundreds of hours in warehouses in the last few years. I think that’s a good ground zero to get at this. That is a function of a low-road approach to automation and has been evident in research going back to Taylorism in the early 1900s. So, that has nothing to do with AI in particular, although we have data that show it’s more intense in some ways with AI. But getting improved productivity at the expense of job quality is a common tradeoff, because preserving or enhancing job quality while you implement automation is a more complex endeavor.

Pick some non-fancy automation, like a cross-feed sorter. Instead of having people carry things from one point to another, you just put it on a belt and you scan it, and then the system automatically directs that parcel to the right zip code. You turn that on, and there are fewer jobs available in that work zone because you need less handling. But the jobs that remain, unless you’re careful, become quite deadly dull. Extraordinarily repetitive. You’re separated from your coworkers. You’re trying to meet a quota, so you don’t even interact with them. And you get repetitive motion injuries cropping up. And yet, your throughput and profitability take a nice bump immediately. That’s a very, very hard target to walk away from and has been since the Industrial Revolution.

Job quality, I think, is really what people are worried about in the end. The stats and the research are pretty clear that there are jobs gained and lost with automation, but the volume of change is pretty low (and, societally, maybe healthy, actually) compared to the change in job quality: the meaning, skill, career, pay, and dignity that can come from doing a job. You can suck the air right out of the working experience through automation. It doesn’t have to be AI.

I am particularly focused on skill because I think it bundles together a lot of those things, like meaning, feeling like the human is enhanced by working in the system rather than degraded. I know for certain from my research that there’s a way to implement automation that builds skill and as a result enhances the dignity of everyone from entry-level, minimum-wage workers all the way up to surgeons. But it is not obvious, and it is more complicated than the immediate profitability you can get from just “Buy this thing. Everyone read the training manual. Bolt it to the floor and turn it on.” We can grind people, and it doesn’t have to be through manual repetitive work. There’s a lot of sort of data moving and processing, especially in HR or accounting or finance or law, where you have people just repetitively doing some slightly different version of a task. And then you put in more automation and it makes it even worse for those who remain, not better. That’s a completely optional, just totally sloppy waste of human potential.

BOWDOIN: So, if you are starting a business, what do you do? How do you create the human jobs around your business in a way that’s intentional?

NEAMTU: I think you really have to be intentional. A lot of times it’s easier to deploy technology that gets you results without designing it with that human element. I think you have to really be intentional about the human element. Health care is a bit different because you always have the human element: there’s the care itself. There’s going to be a person you’re serving rather than an inanimate object, so it’s going to be harder to take the human element out of it. It’s going to be hard to fully automate. In the home-care industry, people have to go into people’s homes and help them with a variety of different tasks. That inherently requires a lot of skills that I think are less prone to being automated. But there’s definitely a huge wave of automation happening right now with office work. A lot of knowledge work is going to be enhanced by these tools that can summarize knowledge, like helping people get onboarded, which I think will actually be an overall benefit to people joining our organization, understanding what the organization is doing and so on, as long as those tools are designed with the human element in mind.

BOWDOIN: What should a place like Bowdoin emphasize for its students to equip them for the world ahead?

BEANE: We are in the middle of the introduction of a general purpose technology, the equivalent of electrification or the internet. And no one knows what the right thing is to do because no one understands precisely what we’ve been handed. It certainly hasn’t been converted into useful applications. There is an excellent historical account of the introduction of the dynamo, where for the first forty years factories just swapped out a giant steam engine for a giant dynamo and kept all the infrastructure in place for connecting that dynamo via belts to remote machinery throughout the factory. It took ten or twelve years for someone to figure out you could make a small dynamo and put one on each machine and reconfigure the factory.

It just takes organizations and humanity a fairly long time to figure out what to do. And education is a kind of factory. History is going to judge who’s right or what parts of their points of view are right. Anyone who says they know may be useful to listen to but is also dangerous, I think.

Personally, I tend to be on the more optimistic, experimental, risk-tolerant side. For example, I have master’s students I’m teaching in a couple of weeks, and I just trashed a third of my curriculum and am going to make a fairly large chunk of their grade dependent on learning to code (from not knowing how to code) and coding up a web app to use for managing technical projects. Almost none of them will know how to code. Ten years ago, I learned to use Python a bit, and I haven’t touched it since. In the last two days, I started from a cold start on a MacBook Pro and am almost done with a web app that the rest of the world can use for project planning and estimating, using a Monte Carlo simulation to estimate how long a project is going to take. I have no idea what I’m doing in a traditional sense, but it’s almost done.

Plenty of people have ethical concerns and are saying we shouldn’t teach any of this stuff. They’re probably also partially right. I think it’s really good that there are diverse points of view.

There’s a lot of good research that supports the idea that more complex social and critical thinking skills are really important for a career. It’s all accurate. But not engaging with AI is the new third rail. You just can’t do that. Whether you’re building physical skills and want to be a welder or want to be somebody like one of us, producing knowledge, you’ve got to engage with that tool in some way.

BOWDOIN: Allison, in your podcast you don’t shy away from diverse opinions. You have people who say AI without intervention just reinforces power structures and inequalities that currently exist. Then you have other people who say, “I understand these algorithms aren’t built for my objectives; they’re built for the company’s objectives. But if they come close to meeting mine, I’m good with it.”

Where do you think Bowdoin would best put its energies around educating people who are going to be in this era?

RYDER: You might be thinking about conversations we had with folks from Amnesty Tech at Amnesty International. It really exemplified and amplified comments I’ve heard from other people, just on a bigger scale, about how, if your dataset is not complete or not holistic, you’re going to introduce bias. And in some ways it’s inevitable, but what can you do about it?

What the Amnesty folks are concerned about are things like surveillance and why it is being deployed in specific areas—facial recognition systems, what have you—and saying, “This just reinforces these really inappropriate views we have about certain demographic categories, and we’re just going to use this tool to kind of support our own biases on this.”

So, that gets really scary. When you think about what people are doing inside business, the end goal might not be super philanthropic, but if the company’s mission is to make the world something of a better place or is fueling some commercial interest, it’s not horrible. For me, mine is to teach people how to manage and lead organizations better, and I can feel good about that. But we’re also not using AI to make ourselves more profitable like a lot of organizations are—and need to.

But in terms of what you can teach, there’s a really interesting inflection point now between these biases that are inherently being built into datasets and just everything blowing up in the world around social issues. So, you have awareness about what’s important and how to be more aware of things that impact how decisions are made and you’re thinking, “Well, I don’t want to be isolating a group or targeting a group from something with the tool I’m creating”—that’s easier said than done. But I think if you’re thinking about what’s important to be a good human, be a good citizen of the world, and you take that mentality into the work you’re doing, specifically when you’re using technology, that’s going to help. That’s a very lofty thematic way to think about it.

NEAMTU: To reinforce Allison’s point, I think it’s really important to understand what are the strong points and what are the weak points of this technology. Bias in the data and bias in all the models that we’re producing is a huge, open, very hot topic right now. But it’s kind of a mirror reflection of how humanity isn’t perfect and is biased, and of all the ways we’ve implemented human systems in past centuries. That’s what’s at the core of the liberal arts: complex social issues and developing critical thinking, that kind of broader picture. I came from a particularly STEM-y high school. I could have easily gone into a program where I could have just focused on the things I find really cool, like abstract mathematics and computer science, and not really thought about, well, who is this actually going to impact?

Both are really important. I think it’s great to be able to have options in terms of curriculum and be able to learn how the models work, how the systems work, have a deep understanding of that from kind of a scientific perspective but also at the same time reinforce that with the other side of critical and more socially oriented thinking.

BEANE: All day long I’m studying what sociologists would call second-order effects or unintended consequences. However Bowdoin helps students get there, I think it’s important to understand that there are unintended consequences from technology or general societal choices, and that those tend to be much bigger and have greater consequence than the effect you intended to create, and to get that idea encoded instinctively. You think you’re doing something awesome, and a common outcome of something awesome is something far more terrible.

So, just knowing that’s the story and that you have to do extra work to anticipate that—climate and environment is a nice example. You create externalities from this great new thing you’ve invented. It’s called a coal-fired engine. It takes fifty or eighty years for the unintended consequences to kick in, but boy, are they nasty. That kind of thinking is really important. You can get that lots of ways, though. You can get that by reading Chaucer. You can get that by doing philosophy. You can get that by studying anthropology. Ecology. Anything focused on complex systems.

But it’s hard for me to imagine a class now where you shouldn’t be forced to engage even with the raw, messy, untooled version of generative AI tech to try to amplify or extend your creativity—try to build something, to support it, even if it’s just reading Shakespeare. How could you use ChatGPT or some variant—Claude, say—to help you be a better reader of Shakespeare and a better writer? I’m hard pressed to think of a class where 15 percent of the curriculum shouldn’t be around the idea of “The calculator was just invented for thinking, and we have no idea what to do with it, but we’ve got to mess around with it. Otherwise, we’re doing you a massive disservice.”

There will be plenty of other people who are like, “No, the calculator is bad. We shouldn’t use this.” But I don’t know. I mean, if Bowdoin were the kind of place I would want to show up to, it would mandate that in every single class. Faculty would have no idea what they’re doing. Neither would students. And it would be messy and maybe parents would complain: “What is this experiment you are running on my child?” But the world is running the experiment without your consent right now. At high velocity. I just think it should be immediately mandatory. And a lot of forgiveness granted to everybody.

BOWDOIN: Allison, I’m going to steal an idea from your podcast and ask you each some rapid-fire questions. What is an activity that you like that doesn’t have anything to do with technology?

NEAMTU: Climbing.

BEANE: Live music. I just went to a Jacob Collier concert at the Hollywood Bowl a few days ago with my wife. It was amazing. Incredible.

RYDER: I wanted to say that too, but then I was thinking about how much tech goes into production of shows. I would feel guilty not saying time with my dogs, but I also just really like to exercise. And what I really don’t do when I exercise is rely on metrics. I don’t want a thing I have to charge and remember to bring with me and constantly look at. That actually makes it less enjoyable and feels less productive.

BOWDOIN: What’s the first career you wanted?

NEAMTU: I wanted to be a musician, be in a band.

BEANE: Same.

RYDER: It’s on construction paper I think my mom still has, from kindergarten back-to-school night, and it said, “When I grow up, I want to be…” I said a singer and a dancer.

BOWDOIN: Such a performance mindset here with you all!

RYDER: I was really into Madonna.

BEANE: Wow.

RYDER: I had seen Dick Tracy. So, it was a very PG thing.

BOWDOIN: Last question: What do you wish AI could do that it currently cannot do?

RYDER: I wrote these questions and I don’t even —

BOWDOIN: I know. I’m sorry, I stole them all.

RYDER: No, no. It’s fine! We know our devices are listening to us all the time. Probably something Matt said in today’s conversation I’m going to get some kind of ad for in about twenty minutes. But what my device can’t do is just always meaningfully automate or auto-populate or suggest. What the automation thinks is helpful is not necessarily what I find helpful. So, just more seamless integration across my devices and my life in a way that isn’t creepy.

BEANE: I’d like to nudge us all to more capability, just by using it. Personally, I want a host of distributed agents to design a new course for project management. Or to book the coolest new vacation after having read every post I’ve ever written and all of my email and listened to all of my voicemails. I don’t care about the invasiveness. I’m like, “Just make my life better now, please.” That feels like no more than ten years away, maybe even two. I mean, awful things could also happen. But what I’m wishing for I feel like is now actually possible.

BOWDOIN: Octo, you get the last word.

NEAMTU: Take care of my grandma.

RYDER: [Laughs] We have these selfish “Help us do our work faster and better,” “Give us good vacations” ideas, and you’re like, “Let me just take care of someone.”

Alison Bennie is editor of Bowdoin Magazine.

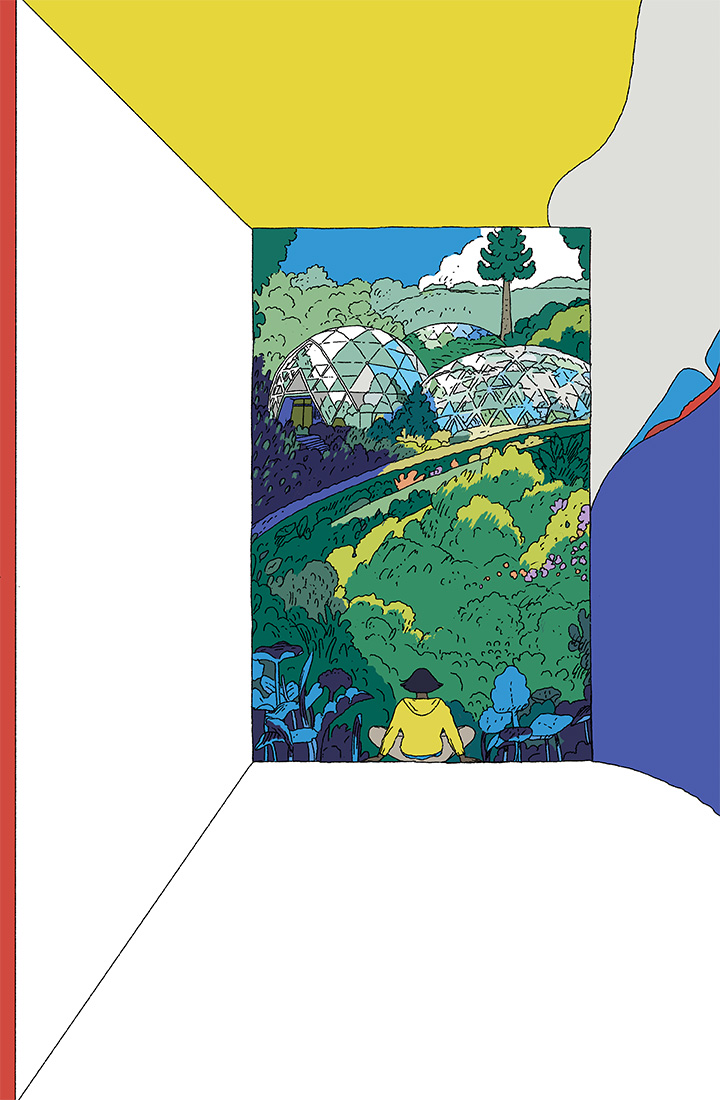

Celyn Brazier is an animation and art director and editorial illustrator based in London, England.

This story first appeared in the Fall 2023 issue of Bowdoin Magazine.