Exploring the Perils, and the Misconceptions, around Artificial Intelligence

By Tom Porter

Artificial intelligence (AI) has enormous potential to improve our lives in many ways, we are told. However, this exciting new field is also laced with pitfalls for academic researchers. How should they navigate the ethical challenges that come with using AI?

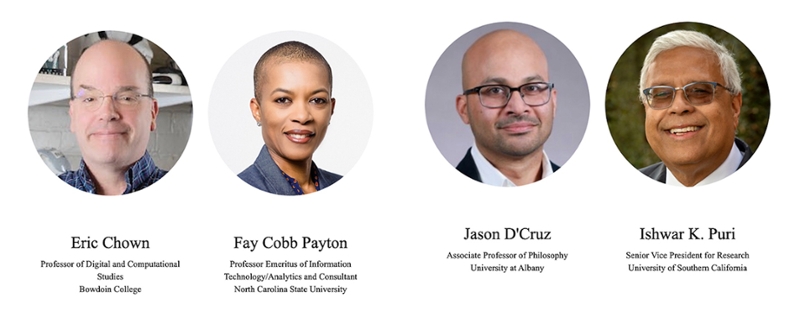

This was the question posed to four scholars from across the world of higher education in a recent virtual forum organized by The Chronicle of Higher Education. Among the featured experts was Sarah and James Bowdoin Professor of Digital and Computational Studies Eric Chown, who teaches courses in AI, cognitive architecture, and computer programming, among other things.

The panel began by discussing concerns around the field of generative AI, which refers to technology’s ability to generate realistic images, audio, and text. Some tech industry leaders recently called for a six-month moratorium on the further development of generative AI in order to allow the industry to establish some ethical protocols.

Chown said he was against the moratorium for several reasons. “One is it probably isn't feasible. A lot of people are able to do this kind of work in an unregulated way now and asking them to stop might be the right thing, but a lot of people, a lot of companies, might not be willing to.” More importantly, Chown said he thinks the proposal makes AI “seem a lot more powerful than it really is. It lends to this growing hype that any day now we're going to have artificial general intelligence, and the reality is, we're still an awful long way away from it.”

Another question addressed how computer scientists are trained and how to ensure that future generations of researchers are sufficiently aware of the ethical implications of their work. AI is increasingly regarded as one of the basic tools of academia, alongside reading, writing, and argumentation, said Chown. “We need to be teaching our students to use those tools responsibly and ethically and effectively.” (Bowdoin College is directly involved in this area through its participation in the Computing Ethics Narratives project, which aims to integrate ethics into undergraduate computer science curricula at American colleges and universities.)

The final question for the panel dealt with the loneliness and alienation that social media have caused, especially, but not exclusively, for young people. Panelists were asked how they think current AI research might help that problem or make it worse. In other words, will we be hanging out more with imaginary electronic friends, and if so, what might this do to our mental health?

“Undoubtedly people are going to be spending more time with chat bots,” responded Chown, pointing out that AI systems can now replicate the words and actions of deceased family members, meaning “essentially, you can recreate them. The issues there are going to be myriad,” he added. No matter how advanced the technology, though, online interactions lose something by not being in person, said Chown. “For sure, those AI chat bots [are] going to have many of the good properties of other people, but they are going to lose. It'll be empty calories essentially, and I don't think in the end they're really going to help loneliness all that much.”