James Little ’19: Teaching Computers to Teach Themselves

By Rebecca Goldfine

To train a computer to do something accurately and consistently, even something as simple as detecting a ball in a field, someone must spend many hours at the often tedious task of inputting data.

Take the ball-in-a-field scenario: To have a computer learn to locate a ball in a field, a programmer must enter many thousands of images of balls in fields, labeling and classifying all the associated data. "It would take one person two weeks of working full time to do that," Little said. "And especially if you're a student working in an academic setting, it doesn't make sense for you to stop a project for two weeks."

Indeed, Little suggests, it makes more sense to train a computer to teach itself how to identify a ball in a picture, whittling down eighty hours to a few minutes.

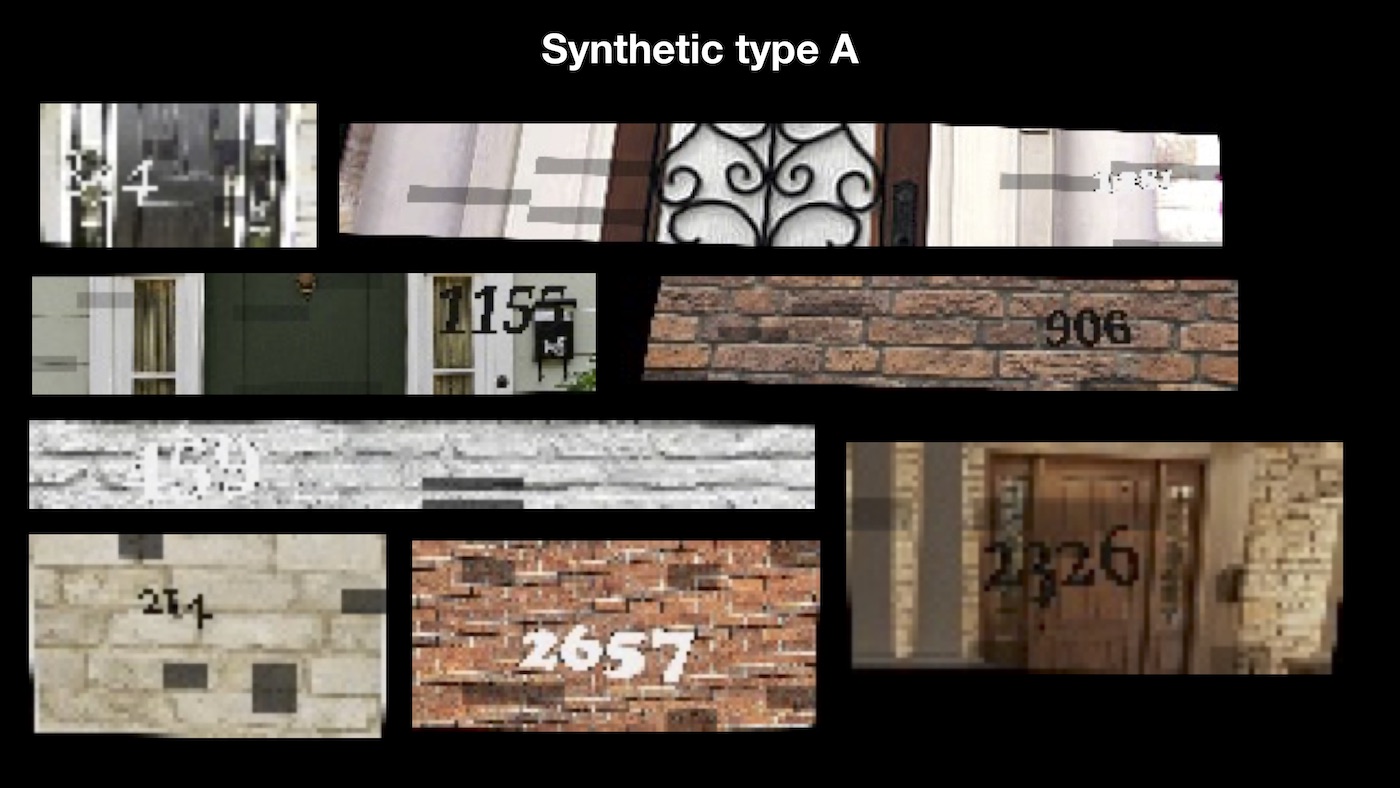

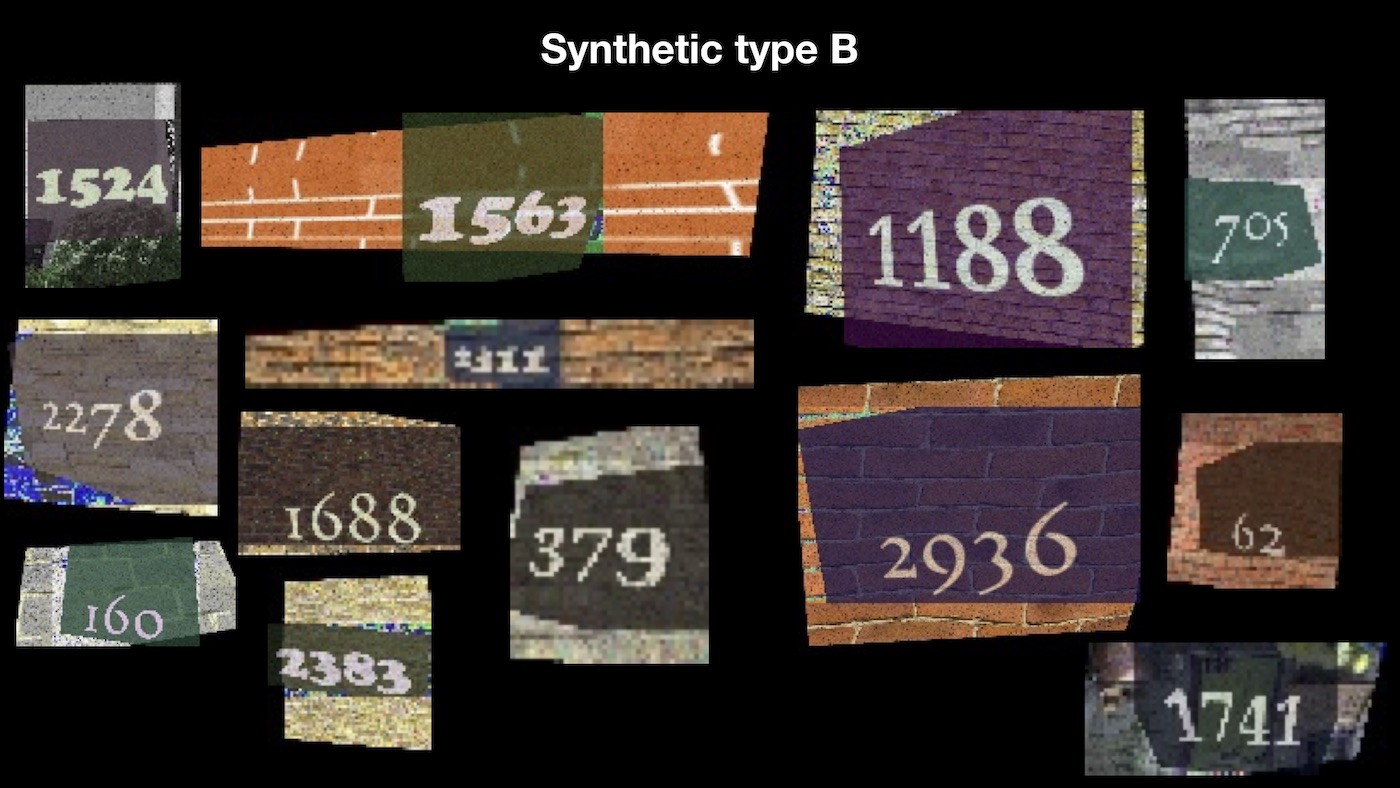

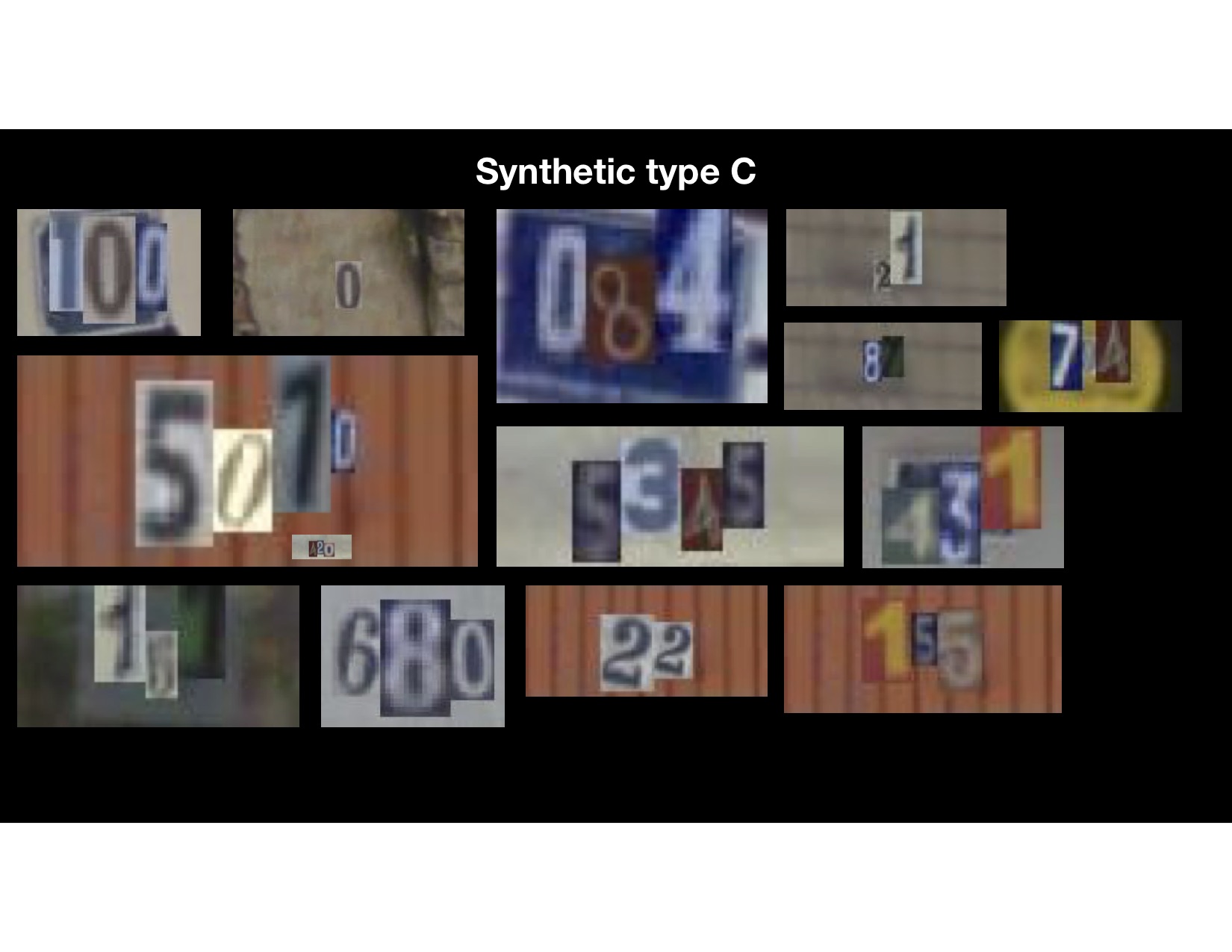

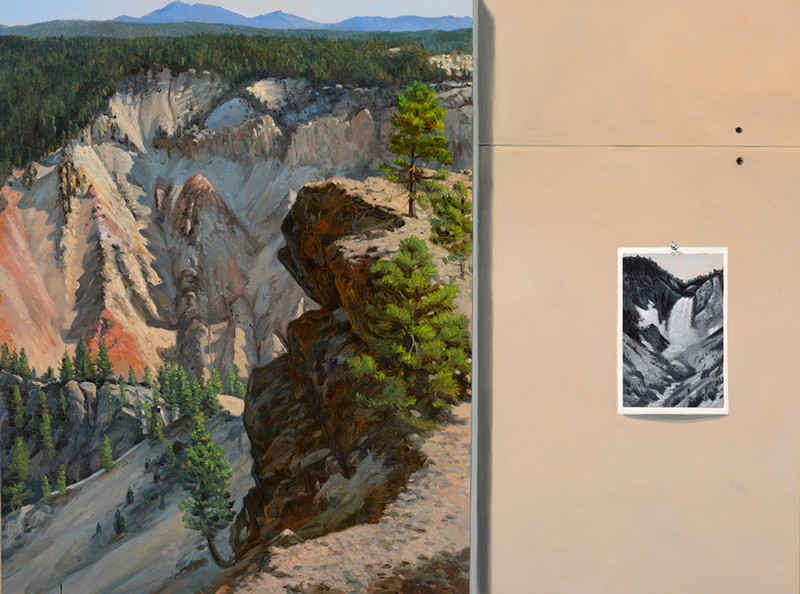

This year, Little, a computer science major and art history minor, has been working on an honors project to explore a new machine-learning method based on "synthetic data generation." The basic idea is that if you can expose a computer to a small amount of real-world data—a few "ingredients" that the computer can blend together—it can use the information to rapidly produce a large set of artificial scenes to make up its own training set.
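The "ingredients" idea can be illustrated with a minimal sketch. It is not Little's actual code: it assumes images are plain grids of pixel values, and the function names `make_scene` and `generate_dataset` are hypothetical. A small foreground patch is pasted at a random spot on a background, and because the program chooses the location, the label comes for free.

```python
import random

def make_scene(background, patch, rng):
    """Paste `patch` onto a copy of `background` at a random position.

    Returns the new scene and its label: (top, left, height, width).
    """
    bg_h, bg_w = len(background), len(background[0])
    p_h, p_w = len(patch), len(patch[0])
    top = rng.randint(0, bg_h - p_h)    # randint is inclusive on both ends
    left = rng.randint(0, bg_w - p_w)
    scene = [row[:] for row in background]  # copy so the original is untouched
    for r in range(p_h):
        for c in range(p_w):
            scene[top + r][left + c] = patch[r][c]
    return scene, (top, left, p_h, p_w)

def generate_dataset(backgrounds, patches, n, seed=0):
    """Blend a few real 'ingredients' into n labeled synthetic scenes."""
    rng = random.Random(seed)
    return [
        make_scene(rng.choice(backgrounds), rng.choice(patches), rng)
        for _ in range(n)
    ]

# Toy ingredients (made up for illustration): one empty 6x8 background
# and two small "objects" with nonzero pixel values.
backgrounds = [[[0] * 8 for _ in range(6)]]
patches = [[[9, 9], [9, 9]], [[7, 7, 7]]]
dataset = generate_dataset(backgrounds, patches, 1000)
```

Each entry in `dataset` pairs a scene with the bounding box of the pasted object, so no one has to label anything by hand. A real pipeline would work on photographs and vary scale, color, and blur as well.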

Little's advisor, computer science professor Eric Chown, said Little's research is "hot" now, as well as substantial. "Machine learning is the single most important topic in computer science right now—see the billions of dollars being invested by Apple, Google, Tesla, Microsoft, GM, Uber, Toyota, [and Stanford University]," he said. "And the hardest thing in machine learning is getting good data."

Google's self-driving car project, for example, has been gathering data for a decade now, Chown noted, and the company has driven its cars millions of miles. "Once upon a time it was unthinkable to cheat this process by using synthetic data. But in recent years there is a growing body of evidence that this may no longer be true," Chown said.

Little said his research question is "open-ended and holistic." "Is this viable?" he asked. "What are the challenges?"

It turns out there are quite a few challenges.

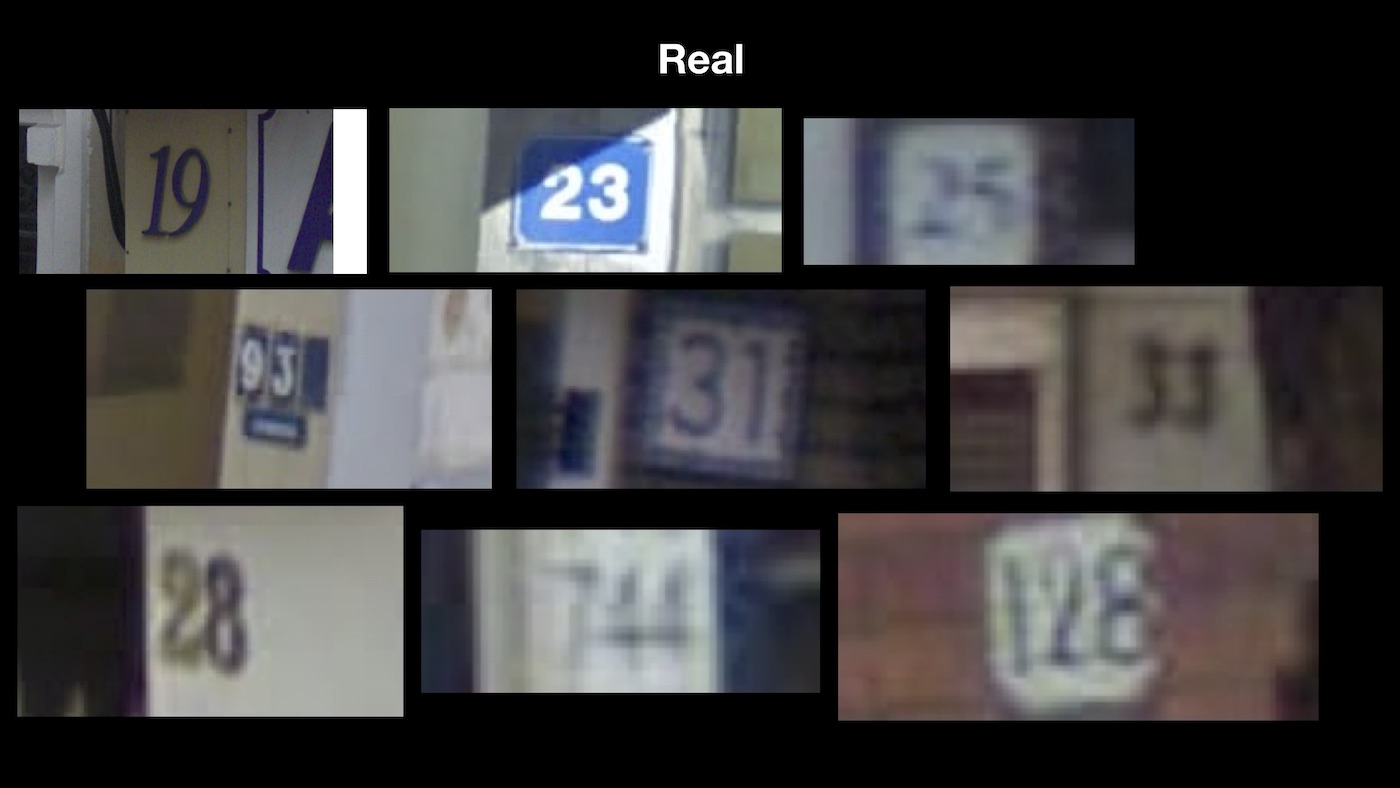

For his project, Little worked with house numbers collected from Google Street View data. The address numbers appear in different colors, fonts, and sizes. In some images, they are blurry. With a set of fifty cropped images, Little encouraged his computer to generate its own synthetic set of house numbers.

But the computer struggled to figure out what it was supposed to focus on, and its output was inconsistent and inaccurate.

So Little is now breaking down the components in the original photos and rebuilding them into images easier for the computer to discern. He calls this strategy "isolation-based scene generation."

"You have to figure out just what kind of data you need to feed the computer to get good results," he said. "Once those datasets get generated, they are fed as-is into the computer, and the computer crunches through those images to create a model, which hopefully, if it’s learned enough, can locate a house number in an image."

Chown said Little's project is testing the limits of synthetic data generation to see what is possible. "It is the kind of project where even if the results are negative—i.e., synthetic data doesn’t work—it is still incredibly useful for scientists trying to understand and improve machine learning."

A few of James's favorite classes

- Art History: Illuminated Manuscripts and Early Printed Books, with Stephen Perkinson "It was so exciting to explore the connection between books and literature in medieval times. I learned a lot about how information was learned and disseminated in the Medieval world."

- Computer Science: Foundations of Computer Systems, with Sean Barker "Peeking behind the curtain to understand how that code was being run opened up a whole new world of computer science!"

- English: Modernism, with Marilyn Reizbaum "This course was far enough out of my wheelhouse that I worried I was going to be completely out of my element. But my professor did a fantastic job introducing the incredibly complicated subject matter and leading discussions about theories of modern literature in class."

After a year of working on machine learning, Little said he plans to focus after graduation on his other interest in computer science: human-computer interaction design.

This summer, he starts a new job with Stripe, an online payment processor in San Francisco, working on its merchant application to improve the human-computer interaction for customers.

"I really enjoy the process of seeing something come out of nothing," he said. "It's exciting to be in this space where experts in the industry are also working to create something new."