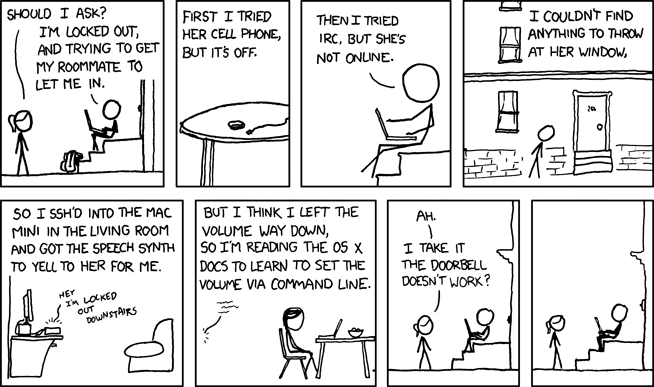

As a first step, you need to access a Unix terminal window. If you're on a Mac or Linux machine, you already have a Terminal application ready to go. On a Mac, the Terminal application is located at Macintosh HD/Applications/Utilities/Terminal. Open the Terminal application, then type the following command to log in to one of Bowdoin's Linux machines:

ssh userid@dover.bowdoin.edu

where userid is replaced by your Bowdoin username (e.g., sbarker). Press return, and you should be prompted for a password. Enter your Bowdoin password and you should be logged into the server dover.

If you're on Windows, you don't have a native Unix terminal, but you can log in to dover using PuTTY. Install PuTTY, then connect to dover.bowdoin.edu using your Bowdoin credentials. You'll be greeted by the terminal window, which will look something like this:

sbarker@dover$

The text showing in your terminal window is called the command prompt (usually ending in a % or $, depending on your system). The command prompt waits for you to enter a Unix command, which is then executed when you press enter. Any output that the command produces will be shown below the command prompt. After the command has been executed, another command prompt is displayed so that you can enter another command, and so forth. If you are familiar with the Python shell, this is the same idea.

Before we jump into running any actual commands, we should first understand the basic structure of Unix commands. Unix commands generally share the same three-part syntax, which is as follows:

program options arguments

In this framework, program is the name of the program that you are executing, options are various settings that affect how the program will behave, and arguments are the pieces of data that you hand to the program (sort of like passing arguments to a function in a program). Here is an example command that uses all three parts:

ls -a /dev

In this example, the program is named ls (which stands for "list files" and displays the files contained in some directory), a single option -a is specified, and a single argument /dev is provided. Executing this particular command will show all the files in the /dev directory, including hidden files (which is what the -a option does). Any number of options and arguments may be specified, including zero. For example, running ls by itself (with no options or arguments) will show the non-hidden files in the current directory.

By convention, options generally (but not always) start with one or two dashes. For example, ls accepts both -a and --all, which are two names (aliases) for the same option. One could specify the two options -a and -l either separately, as in ls -a -l /dev, or combined, as in ls -al /dev. One could list multiple directories by specifying multiple arguments, e.g., ls /dev /tmp. Finally, some options themselves take values (which are basically like arguments that are passed to the option rather than to the program itself). For example, below is a program compilation command that will be used later in this tutorial (don't worry about exactly what this command does for now):

gcc -Wall -o hello hello.c

Although it might appear that this command has two options and two arguments, it actually has only one argument, which is hello.c. The -o option specifies the filename of the compiled program that is output, and hello is that filename. Hence, here is an equivalent way to write the above command that merely switches the order of the two options (which is not significant):

gcc -o hello -Wall hello.c

Here, we again have two options -o and -Wall (where the former option is given an input) as well as the one program argument hello.c.

Options that don't take any inputs (such as -a in the ls example and -Wall in the gcc example) are often called flags, since they are either set or they aren't (i.e., they're boolean inputs).

Most of the specific details above are merely conventions, and may not always be followed by particular programs (but most do). However, nearly all commands still fit into the general program/options/arguments structure. In practice, there is no substitute for learning the most common commands through experience.

Now we're ready to start running some of our own commands!

When using the terminal, at any given point your terminal session "exists" within a specified directory. This is called your working directory. The output of many commands depends on your working directory. Initially, your working directory is your home directory, which is typically where all of your files are stored. Each user on a machine has a home directory, which is normally accessible only by that user and no others.

To see your current working directory, execute the pwd command (print working directory):

```
sbarker@dover$ pwd
/home/sbarker
```

This says that my current working directory (which is my home directory) is the directory called 'sbarker', located in the directory called 'home', which is one of the top-level directories on dover.

To see the files that are in your current directory, use the ls command (list files):

sbarker@dover$ ls

Your home directory on dover is actually the same as your microwave network directory, so you should see any files that you have ever saved to your microwave space.

You can also list the files in a directory other than your current directory by passing an argument to ls. For instance, try viewing the home folders of all the users on dover by listing the /home directory:

sbarker@dover$ ls /home

Note that while you can see all of the other home directories, you can't actually look inside any but your own. To see this, try to list the files inside my home directory (/home/sbarker).

Command History: One very useful feature that will save you typing is your command history, which you can access using the arrow keys. Pressing the up arrow fills your terminal prompt with the last command you entered. Pressing up again moves back in time to the second most recent command you entered (and so forth). Similarly, pressing down moves forward in time through your command history. Two scenarios are very common: rerunning a command that you recently ran, and correcting a command that you mistyped (and that therefore did not run correctly the first time). Don't waste your time retyping commands! Instead, use the arrow keys to access your terminal history.

Tab-completion: Another very useful feature that will help you type less is tab completion. Whenever you're typing a filename or directory name (like /home/sbarker), pressing tab partway through will complete as much of the name as possible, based on the files and directories that already exist. For instance, if you type only /home/sb (as an argument to a command like ls) and then hit tab, the path will autocomplete to /home/sbarker, as long as no other directories in /home start with the letters "sb". Try this out. If more than one name matches, pressing tab multiple times will display all files that match what you've entered so far, while leaving your existing command fragment intact. For example, try to autocomplete ls /home/s instead of ls /home/sb. Get in the habit of pressing tab when entering file and directory names!

To change your working directory, use the cd command (change directory):

sbarker@dover$ cd /home

Now your current working directory is /home. Try running ls again (without any arguments). Now change back to your home directory.

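A pathname that starts with a forward slash, such as /home/sbarker, is an absolute path. It gives the full location of a file or directory, starting from the root of the filesystem (/).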

A relative path, on the other hand, does not start with a forward slash, and refers to a pathname relative to the current working directory. So, for example, if I am currently located in /home and I try to cd to the directory named sbarker (NOT /home/sbarker), then I am saying I want to go to the directory named sbarker, located within the current working directory. Obviously, the file indicated by a relative path depends on the current working directory. In contrast, an absolute path does not depend on the current directory. If you cd to an absolute pathname, it will work the same way regardless of where you currently are in the filesystem.

In most cases, relative pathnames are used, as they usually involve less typing.

One special and important case of relative pathnames is the pair of special directories 'dot' (.) and 'dot dot' (..). The . directory refers to the current working directory, while the .. directory refers to the parent directory of the current working directory. So, for example, if you are located in your home folder, running the following:

sbarker@dover$ cd ..

would move you to the /home directory. If instead you ran the following (also starting from /home/sbarker):

sbarker@dover$ cd ../alice

This would move you from /home/sbarker to /home/alice (assuming that directory existed and that you were actually allowed to access it). You can chain together multiple .. directories to move up more than one level. For instance, if you started at /home/sbarker/documents/papers and wanted to move to /home/sbarker/downloads, you could run cd ../../downloads. Alternatively, you could get there using the absolute path by writing cd /home/sbarker/downloads (which would work regardless of where you started).

You can also run the cd command without any arguments to go back to your home directory, regardless of where you currently are.

Now, let's make a new directory called unixworkshop using the mkdir command (make directory):

sbarker@dover$ mkdir unixworkshop

Note again that here we're using a relative path; we're saying to create a directory using the relative pathname unixworkshop, which creates a directory in our home directory since that's where we currently are. We could've specified the same thing by writing an absolute path:

sbarker@dover$ mkdir /home/sbarker/unixworkshop

but this would've been much more typing than necessary.

Now that we have a new directory, cd into it (remember to use tab completion!) and then run pwd to verify that you're in the right place.

To copy a file, use the cp command, which has the basic form:

cp [sourcefile] [destfile]

This copies the first named file to the second named file. If the second argument is an existing file, that file is overwritten. If the second argument is not an existing file, that file is created. If the second argument is an existing directory, the file is copied into that directory under its original name. Try copying the existing file /etc/nfs.conf (this is a system configuration file) to your current directory. Remember that you can use . to represent the current directory!

Now that you've made a copy of the file, let's view the contents of your new copy. A convenient utility to read a file is called less, which takes a filename as an argument and opens the file for viewing in the terminal window.

less [file]

Try opening nfs.conf using less to see what's in the file.

Inside the less interface, use j/k to scroll down/up (or you can use arrow down / arrow up, but getting used to j/k will save time). To quit and return to your command prompt, type q.

Unix has lots of commands, and many of these commands also have lots of different command line flags and options. For example, try running ls with the command line flag -l (long listing), as follows:

ls -l

This will show the files in the current directory in an extended format that shows a lot more information, such as who owns the files, who has permissions to read the files, the file sizes, and when the files were last modified.

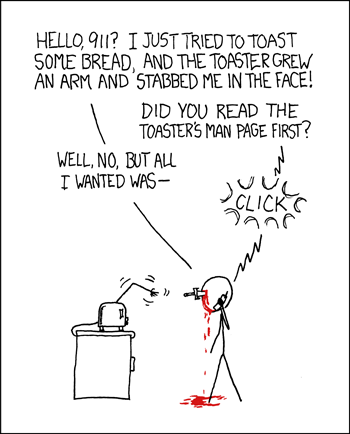

Due to the number of different commands and command-line options for many commands, it can be hard to keep track of them all! Luckily, there is a handy built-in manual that contains detailed information on how to use every individual command. To view the manual for a particular command (which, most notably, describes all the command-line flags that the command accepts), use the man command, e.g.:

man ls

The manual interface behaves just like the interface for less. If you are ever in doubt as to how to use a command, consult man!

Try this as an exercise: create another directory named dir1, copy nfs.conf into it, then copy the entire dir1 directory to dir2 (i.e., make an entire copy of the directory, including the enclosed nfs.conf) using cp. You'll need to consult the manual for cp to determine how to copy a directory using cp (hint: copying a directory and everything in it is a recursive copy)!

Closely related to cp is the mv (move) command. It is called in the same way as cp but simply moves a file to the desired location (as opposed to making a copy there). This is also how you rename a file: call mv with a different destination filename than the source filename.

mv [sourcefile] [destfile]

Try renaming your nfs.conf file to myconfig.txt using mv. Use the ls command to check that you successfully renamed the file.

Finally, to delete a file, use the rm (remove) command:

rm [file-to-delete]

Be very careful with the rm command! Files deleted with rm do not go to a trash folder and generally cannot be recovered, and we don't want you to lose important data!

Try deleting dir1/nfs.conf (which you should've created earlier) using rm. Now try deleting the entire dir2 directory (which includes the other copy of the file). You might need to consult man again!

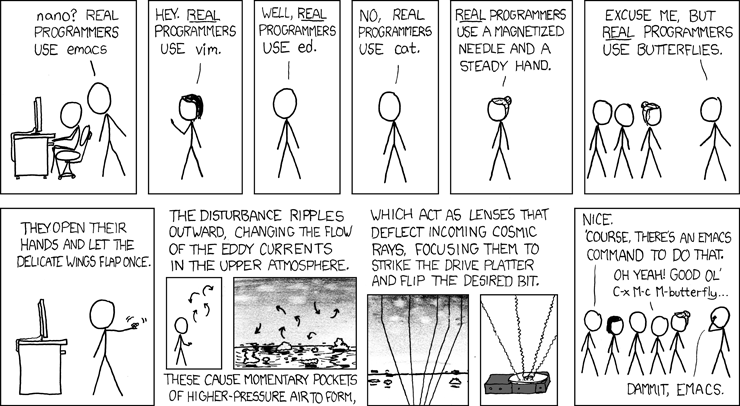

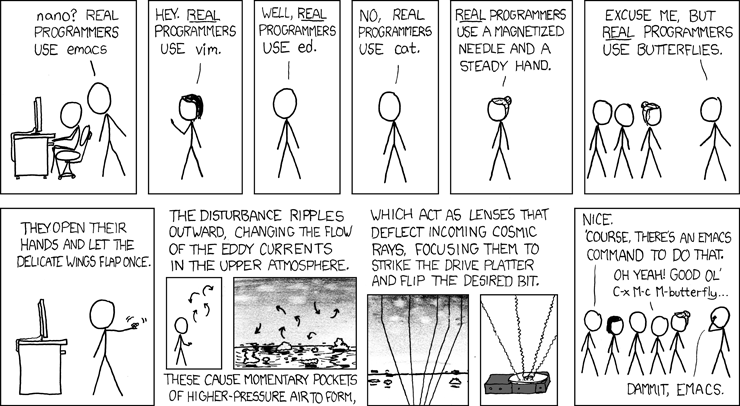

There are many command-line text editors that have various pros and cons. The two most well-known command-line editors are Vim and Emacs (this document was written entirely in Vim). Both editors are extremely powerful and efficient in the hands of experienced users. For novices, however, these editors exhibit a significant learning curve that can be frustrating. Therefore, my usual advice for complete beginners to the command line is to use Nano, which is a much simpler editor that is less likely to frustrate as you are just starting out.

To start editing a new file using nano, simply call the nano command with the desired filename of the new file to create.

nano myfile.txt

You are now in the nano interface, and can type just as you would in any other editor. Some commands are shown along the bottom of the window; note that the caret symbol (^) refers to the control key. The most important commands in nano are ^O (control-O) to save the file and ^X (control-X) to quit nano. Nano includes many other commands for things like cutting/pasting text and searching through the file. Here is a handy cheatsheet of Nano commands.

Type some text in your new document, save the document, then quit the editor. Verify the contents of your new file with the cat command (man cat if you aren't sure how)! To open the now existing file and change it, simply run nano myfile.txt again, which will open up the existing file in the nano window (use your terminal history or tab-completion to avoid typing the filename again)!

New users of command-line editors (including simpler editors like nano) are often frustrated at the lack of mouse control and familiar shortcuts found in most graphical word processors and text editors. Channel this frustration into learning editor commands! These editors have commands to do nearly anything you might want to do, and are usually faster than using the mouse in a graphical editor. As a concrete example, novices who want to go from the end of a line of text to the beginning will often just move through the entire line one character at a time using the arrow keys. This is slow, frustrating, and a waste of time! In nano, this operation can be done in two keystrokes (control-A). In vim, this operation takes only a single keystroke! Bottom line: don't settle for doing things slowly and awkwardly. If you find yourself wasting time on editor tasks, consult a command reference, Google, or your local command-line editor guru to learn how to do it faster!

We've now covered the basics of navigating in a Unix command line environment. While there are many more commands than those described above, these should be enough to get you started working with the command line.

Before starting the next part, cd back to your home directory. You can also clear the contents of the terminal window using the clear command.

The nano editor is fine to start out with, but I don't recommend using it long-term, as it is quite basic and likely to ultimately limit you with its lack of features. Once you have gotten your bearings in the Unix environment (perhaps after spending a few weeks or months using nano), I strongly encourage you to graduate to either vim (my personal preference) or emacs. It is well worth your time to become proficient in one of these editors, and the more time you spend using them, the more efficient you will become.

Luckily, both Vim and Emacs include built-in tutorial modes. To run the Vim tutorial, run the vimtutor command. To run the Emacs tutorial, start the editor by running emacs, then type Control-h followed by t to start the tutorial. I am a fairly knowledgeable vim reference and am happy to answer questions, while others in the department (but likely not I) can speak to emacs usage.

Of course, if you'd rather just skip Nano and start with a more robust editor immediately, feel free! There's no particular need to work with Nano initially, other than reducing the overall learning curve as you get used to working in the command-line environment.

Now let's try compiling and running a C program using the command line. First, in your home directory, create a new directory called myprogram. cd into that directory and create a new file called hello.c. Type the following Hello World program into your new file:

```c
#include <stdio.h>

int main() {
    printf("Hello World!\n");
    return 0;
}
```

Now let's compile the program by calling the standard C compiler program, gcc:

gcc -Wall -o hello hello.c

The gcc command takes a list of source files to compile (in this case, just hello.c) and outputs the compiled executable (or a list of errors if the program does not compile). In the above command, we pass two options in addition to the filename. The first, -Wall (Warnings: all), turns on a large set of useful compiler warnings (despite the name, not literally all of them). The second, -o hello, says we want the output executable file to be named hello. If we omit the -o hello option, then gcc defaults to producing an executable named a.out (not very informative).

Note that if we are writing C++ code instead of plain C code, everything is exactly the same except that we use the g++ command instead of gcc.

To run the compiled executable, we run the executable name as a command:

./hello

Note that the ./ indicates that we're running a program located in the current directory.

Always compile with the -Wall flag. This flag essentially instructs the compiler to give you as much programming feedback as possible. It will often output warnings about things that aren't strictly wrong but are still symptoms of bugs in your code. Let the compiler help you as much as it can! If you use a Makefile (see below), then you can easily automate including this flag so you don't have to worry about forgetting it.

Also, get in the habit of treating all warnings like errors, even if the warnings only appear when using the -Wall flag and not without. Warnings are very often symptoms of bugs, and even if they aren't, they're usually an indication of bad programming style or gaps in understanding. Never be satisfied by a program that produces warnings, even if it compiles in spite of them! Fix all warnings and only then try running the program.

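Typing out the full compilation command every time you rebuild your program is tedious, and it is easy to leave out important flags (such as -Wall, which you should never do). As our programs get more complicated and involve multiple source files, the compilation command gets longer and this problem gets worse. Since our objective in using the terminal is to be efficient, we can automate compilation with a Makefile. This is a file used by the program make that says how to compile your program.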

Create a file called Makefile alongside your source file and input the example contents below.

```makefile
CC = gcc
CFLAGS = -Wall

hello: hello.c
	$(CC) $(CFLAGS) -o $@ hello.c

clean:
	rm -f hello
```

Note that the two indented lines must be indented with actual tabs (not spaces). If you copied and pasted from above, you'll likely need to fix this.

A Makefile consists of a set of targets, each of which tells make how to do something. The example above contains two targets: hello, which says how to compile the hello executable, and clean, which says how to clean up after the compiler by deleting generated files (in this case, just the hello executable).

Each target may specify a set of dependencies, which are filenames and/or other target names that appear on the same line as the target name after the colon. In this example, the hello target has one dependency (the hello.c file) and the clean target has no dependencies.

Additionally, each target may specify a set of zero or more commands to execute when the target is invoked. In this example, both targets specify a single command to run: hello runs gcc to compile the program, and clean runs rm to clean up. Note the use of three variables in this Makefile: $(CC) and $(CFLAGS) are defined in the Makefile itself (on the first two lines), and $@ is a special built-in Makefile variable that evaluates to the name of the current target (which in this case is hello).

The Makefile specifies what happens when targets are invoked, but a user still has to actually invoke the target. Invoking a target is simple:

make [targetname]

In this example, running make hello would invoke GCC to compile the program, and running make clean would delete the compiled executable (if it exists). If you run make by itself without any target name, make defaults to building the first target (which is hello in this case). Most often, real programs with Makefiles are built simply by calling make to build the first target (which usually compiles the entire program). This convention avoids requiring the user to know anything about the target names. There are also some common, idiomatic target names (e.g., most Makefiles have a clean target that deletes all temporary/generated files, such as in this example).

One of the reasons make is useful is that it only compiles files when it actually needs to -- i.e., only if the corresponding source files have actually changed since the last compilation. This is the significance of the hello.c dependency specified for the first target and is one of the ways that make uses dependencies.

To see this process in action, run make clean to delete the existing executable (if it exists), then run make to build the hello executable. What will happen if you run make again? Try it out to check. Now, edit your source code (say by changing the message that prints) and then run make again.

This is the basic idea behind Makefiles -- rather than having to repeatedly type long compilation commands, we just type make to compile the program and don't have to think about it. For large projects with many files and dependencies, Makefiles can get very complicated and scary looking. Focus on producing simple Makefiles that do what you need and try not to get bogged down in the many advanced features of make (if you're up for some light reading, here is the make online documentation).

When developing a program, you will typically go through many cycles of editing your source code, compiling it with make, and then executing the program to test it. If you are doing this all in one terminal window, this is very time consuming. You are constantly having to quit your editor to rebuild and run, then going back into the editor to make more changes.

Instead, you will have a much easier time if you open up a second terminal window (command-N on a Mac). Whenever you open up a new Terminal window, you will be located in the home folder of the local machine, so you will need to use ssh again as described in the beginning of this tutorial to log back into dover. You can then use one terminal window to keep your source code open in the editor, and the other terminal window to compile and run your code. Note that each terminal window is independent and has its own working directory, so remember you'll need to cd to the appropriate location when you open the second window.

In general, multiple terminal windows let you avoid constantly switching between servers, working directories, and so on, so be liberal in opening new windows. As an example, I presently have 8 different terminal windows open on my machine, in various locations and editing various files (okay, that may be an extreme example, but it illustrates the point!)

Recall that programs can take command-line arguments. For example, the following command copies the file foo.txt to the file foo2.txt:

cp foo.txt foo2.txt

Here, the name of the program is cp, while foo.txt and foo2.txt are command-line arguments. The cp program is given these arguments and acts accordingly. Most programs expect command-line arguments to run, and may behave differently depending on how many arguments are given. For example, the normal use of the cd command is with one argument specifying the directory to change to. However, you can also call cd without any arguments, which will change to your home directory regardless of your current working directory.

Many of the command-line programs that you write will also use command-line arguments. A program can read the arguments that it's passed using the argc and argv parameters to the main function that you may recognize. Here is an example of a C program that prints out the number of arguments that it is given and then prints out the first such argument (if it exists):

```c
#include <stdio.h>

// a test of command-line arguments
int main(int argc, char** argv) {
    printf("program was called with %d arguments\n", argc - 1);
    if (argc > 1) {
        printf("the first argument is %s\n", argv[1]);
    }
    return 0;
}
```

Save this program as argtest.c and compile it with gcc (remember to use -Wall and -o to specify the name of the compiled executable, such as in gcc -Wall -o somename argtest.c, which would name the compiled program somename). Often, for a source file like prog.c, the compiled program is named prog, but the compiled prog executable is a distinct file from the source file prog.c (the latter is just a text file and cannot be executed).

In the following example, I'll assume that the compiled program is named argtest. Here is an example of executing the argtest program (again, we are running the executable, not the source code file):

```
sbarker@dover$ ./argtest bowdoin computer science
program was called with 3 arguments
the first argument is bowdoin
```

This program demonstrates the behavior of the main function parameters: argc is the number of command line arguments passed to the program, and argv is the arguments themselves. If you haven't used C before, you are likely not familiar with the ** syntax, as in char** argv. Don't worry about this for now; all you need to know is that argv is essentially an array of strings, which you can access with the usual array notation (e.g., argv[0] is the first command line argument).

You may have noticed something odd in the above program: namely, that we printed argc - 1 and argv[1] instead of argc and argv[0], respectively. The reason for this is that the first command-line argument is defined to be the name of the program itself. For example, argv[0] in the above example would be ./argtest (the program name as it was typed), not bowdoin. This means that when you run a program from the shell, argc is always at least 1, since the name of the executing program is passed as argv[0]. In the example usage above, the actual value of argc is 4 (rather than 3). Since we don't really think of the name of the program as a true command-line argument, however, the program excludes it when printing the number of arguments and the 'first' argument.

While the argc and argv parameters of the main function are optional (e.g., we did not include them in the earlier Hello World example), you must include them in your definition of main if your program needs to make use of command-line arguments.

If you are writing code on your own machine (e.g., using an IDE like Xcode or Eclipse) but ultimately need to run on a Linux machine (e.g., on dover), then you will need to copy your files over from your local machine to the Linux machine once you are done developing so that you can test/submit/etc. To copy files from your local machine to dover, you would use the scp (secure copy) command. To copy a file called myprogram.cc (located on your local machine) to unixworkshop/copied.cc (located in your home directory on dover), you would run the following on your local machine:

scp myprogram.cc userid@dover.bowdoin.edu:unixworkshop/copied.cc

Remember to substitute your real Bowdoin username for userid.

The basic form of the scp command is this:

scp [sourcefile] [destfile]

Here, the source file and the destination file can each be either a local filename (e.g., myprogram.cc in the above example) or a remote filename (e.g., unixworkshop/copied.cc, located on the remote server dover.bowdoin.edu in the above example). Try copying a file to dover in the above way using scp.

You can also copy files back from a remote machine to your local machine in the same way by flipping the order of arguments:

scp userid@dover.bowdoin.edu:somefile.txt .

The above would copy somefile.txt located on dover to the current directory on the local machine.

We could equivalently write the above by specifying the full destination pathname as opposed to just the directory:

scp userid@dover.bowdoin.edu:somefile.txt ./somefile.txt

If you omit the filename when copying and just provide the directory, the copied file will retain the same filename as the original.

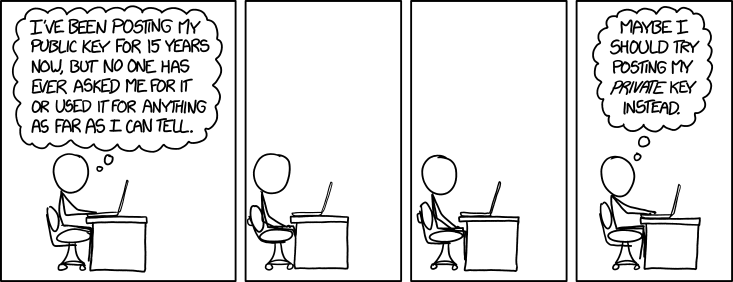

Some servers let you log in with an SSH key instead of a password. Your key (or 'keypair', as it's usually called) actually consists of two files: a private key (often named something like username-keypair.pem) and a corresponding public key (often named something like username-keypair.pub). The public key is not sensitive, and is also stored on the server as a way to identify your account. The private key, on the other hand, is the file that actually authenticates you, and must be kept private.

Keep your private key safe, as it provides access to your server account without a password. Don't send anyone your private key file or leave it anywhere publicly accessible - this is like writing your password on a post-it note!

You can either create a keypair and install it on a server that you already have access to (though doing so is beyond the scope of this tutorial), or you can use a keypair that's already been provided to you to login to a preconfigured server. Assuming you already have a keypair such as named above, you can use it to login to a server that's configured to accept your key (example.bowdoin.edu in this example) like so (remember to substitute your actual username and key file name):

ssh -i username-keypair.pem username@example.bowdoin.edu

Note that, as written, this command expects your private key file to be in the current directory (otherwise, give the path to the key file after -i). Assuming your key file is accessible, this command will log in to the server without prompting you for a password.

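If you get an error that references the permissions on your keypair file, you can fix that using the chmod command, like so:

chmod 600 username-keypair*

This makes the files readable and writable only by you (ssh refuses to use a private key that other users can access). Then try running ssh again.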

While SSH logins using public keys are more convenient than using passwords, remember that they only work if the server is configured to accept your public key. This configuration is very doable but beyond the scope of this tutorial.

If you're using PuTTY on Windows, logging in with a key takes a couple of extra steps. First, you will need to convert your key file into a form understood by PuTTY (this is a one-time step). To do this, start puttygen (a program installed alongside PuTTY itself), click on Conversions, then 'Import key', then browse to and select your private key file. Save your converted private key file.

Now you can use your converted key file to login using PuTTY. Within PuTTY, go to Connection, then SSH, then Auth, then click Browse next to 'Private key file for authentication'. Change the 'Files of type' setting to 'All files', then select your converted private key file. Now you should be able to login in the usual way to the server and your private key file will be used.

Downloading files: You can download a file from the web directly at the command line using wget. Note that some systems do not have wget installed; if this is the case, you can use curl instead, which is similar (check the manpage, as usual)! Here is an example of downloading my homepage using wget:

wget https://www.bowdoin.edu/~sbarker/

Decompressing archives: A popular type of file archive used in Unix-land is a tarball, which is a type of compressed archive that ends in .tar.gz (or sometimes just .tgz). When you download software for Unix, it is likely to be in a tarball format. Let's look at how we can decompress a tarball at the command line. First, let's download a tarball that I've placed on my website:

wget https://www.bowdoin.edu/~sbarker/unix/unixfiles.tar.gz

This downloads a single file called unixfiles.tar.gz that can be expanded into all the files actually contained within the archive. To do this, we use the tar (tape archiver) command:

tar xzvf unixfiles.tar.gz

The tar command is another general-purpose utility that can be used either to make new tarballs or to extract existing ones. In the above idiomatic usage, we are saying to extract the archive (flag x) and simultaneously decompress it (flag z). We also ask for verbose output (flag v), meaning extra information about what the command is doing. Finally, we specify the archive filename (flag f, followed by the archive name). After running this command, you will have an extracted directory containing all the original files that were packaged into the tarball.